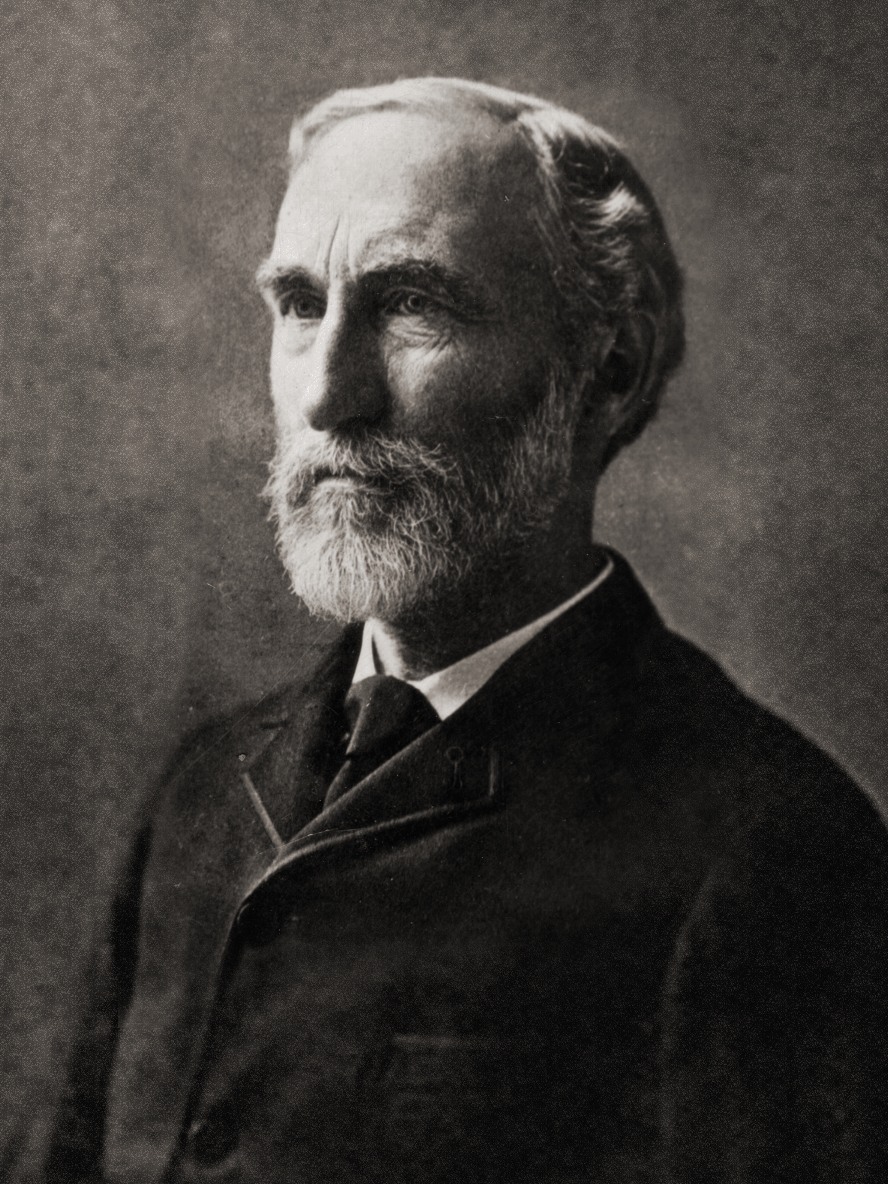

J C Maxwell (this is gonna be another of those black and white men posts...)

I've been collecting some thoughts recently about statistical mechanics, after repurchasing some old books. There are several approaches to statistical mechanics, which can seem to have different implications. I am not going to attempt to carefully delineate them, since theorems from one may be used in another. I am just going to list a few thoughts. There's no real substance yet, but maybe in the future there will be more. There's tons to think about.

R Clausius

The first and oldest is no interpretation at all. More to the point, the original purpose of statistical mechanics was to get classical thermodynamics out of Newton's Laws. One could avoid this and try to derive thermodynamic relations by presuming that they follow from working with analytic free energy functions. These functions are tractable and have beautiful and suggestive visualizations. This technique was used by James Clerk Maxwell and the earlier Gibbs; Gibbs's massive monograph "On The Equilibrium Of Heterogeneous Substances" is basically a formalization of this method. This method is also covered in many an engineering textbook. One could try to stick to these guns, but there are severe limitations on this method. As outlined here, this theory gives poor results when it comes to phase transitions and other physically important phenomena. In addition to its limitations, it gave a somewhat false view of its theorems. For instance, Maxwell thought that the early, static approach to the second law of thermodynamics was necessary to avoid perpetual motion. But I've discussed this before.

Maxwell's next step was inspired by his work on Saturn's rings, with an eye on extending preliminary results due to people like Bernoulli and especially Clausius (pictured above). Instead of trying to use global results (more on this later), Maxwell concentrated on the whole distribution. Conceptualizing a gas as being made of hard spheres that impart velocity on each other, Maxwell showed that there was only one stable distribution with certain symmetry properties (there have been other derivations since). By considering the average case, Maxwell was able to develop statistical laws. Thus was the birth of statistical mechanics.
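To make the "one stable distribution" a little more concrete, here is a minimal sketch, not Maxwell's derivation. It assumes the standard result that each velocity component is an independent Gaussian with variance kT/m, and simply samples from that rather than simulating hard-sphere collisions. The function names and the `kT_over_m` parameter are my own, for illustration only.

```python
import math
import random


def sample_speeds(n_particles, kT_over_m=1.0, seed=0):
    """Draw particle speeds assuming independent Gaussian velocity components.

    Assumption: each of vx, vy, vz is normal with mean 0 and variance kT/m,
    which is the isotropic, component-independent form of the Maxwell distribution.
    """
    rng = random.Random(seed)
    sigma = math.sqrt(kT_over_m)
    speeds = []
    for _ in range(n_particles):
        vx = rng.gauss(0.0, sigma)
        vy = rng.gauss(0.0, sigma)
        vz = rng.gauss(0.0, sigma)
        speeds.append(math.sqrt(vx * vx + vy * vy + vz * vz))
    return speeds


def mean_speed_theory(kT_over_m=1.0):
    """Mean speed of the Maxwell speed distribution: sqrt(8 kT / (pi m))."""
    return math.sqrt(8.0 * kT_over_m / math.pi)


if __name__ == "__main__":
    speeds = sample_speeds(20000)
    print("sampled mean speed:", sum(speeds) / len(speeds))
    print("theoretical mean speed:", mean_speed_theory())
```

With enough particles the sampled average settles near the theoretical mean speed, which is the "average case" flavor of statistical law mentioned above.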

L Boltzmann

The first of the three big approaches I'll call the Boltzmann approach. Boltzmann was a physicist who worked in what he felt to be the tradition of Maxwell (in several of his polemical papers, he uses the Maxwell name as a weapon). I do think his thinking was along these lines, but I wouldn't mind being informed of differences. Boltzmann's approach was based on very large assemblages. This forces the dimension of the subject very high, allowing one to use concentration of measure results to argue that the system will spend almost all of its time in a specific state. Concentration of measure results are direct rather than asymptotic, making them useful for machine learning (hint hint!). A quick introduction to concentration of measure can be found here. The dynamics are important (frequently, Markov Models are used or found), but not as central as the concentration of measure results. One can use these methods to find concentration of measure results, which makes this approach really interesting to me.
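As a toy illustration of what "direct rather than asymptotic" means, here is a rough sketch using Hoeffding's inequality for the average of n fair coin flips. The bound holds at every finite n, with no limit taken. The coin-flip setup and the function names are my own choices for illustration, not something taken from Boltzmann.

```python
import math
import random


def hoeffding_bound(n, t):
    """Hoeffding upper bound on P(|mean - 1/2| >= t) for n fair coin flips."""
    return 2.0 * math.exp(-2.0 * n * t * t)


def empirical_tail(n, t, trials=2000, seed=0):
    """Estimate P(|mean - 1/2| >= t) by repeating the n-flip experiment."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        heads = sum(rng.random() < 0.5 for _ in range(n))
        if abs(heads / n - 0.5) >= t:
            hits += 1
    return hits / trials


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, empirical_tail(n, 0.25, trials=500), hoeffding_bound(n, 0.25))
```

The estimated tail probability stays under the bound, and both collapse quickly as n grows. That is the sense in which a very large assemblage spends almost all of its time near one value.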

Because the measure of the actual system really is concentrated and only the actual system enters into the result, this approach is very objective. For instance, entropy is a semi-objective property. Entropy is (the logarithm of) the count of microstates compatible with the observable macrostate (some subjectivity comes in how we define microstates). There's no obvious connection to information theory in this approach, and being objective has nothing to do with Bayesianism. In this approach, ensembles are a calculation device justified by ergodic theorems and equilibrium arguments.
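Here is a small sketch of microstate counting, using a toy system I picked for convenience: n two-state spins, with the macrostate being the number of "up" spins. Entropy is taken as the logarithm of the microstate count, in units of Boltzmann's constant. The function names are hypothetical.

```python
import math


def multiplicity(n, k):
    """Number of microstates of n two-state spins with exactly k spins up."""
    return math.comb(n, k)


def boltzmann_entropy(n, k):
    """Entropy in units of k_B: S / k_B = ln W, with W the microstate count."""
    return math.log(multiplicity(n, k))


def most_likely_macrostate(n):
    """Macrostate (number of up spins) with the largest microstate count."""
    return max(range(n + 1), key=lambda k: multiplicity(n, k))


if __name__ == "__main__":
    n = 100
    for k in (0, 25, 50):
        print(k, multiplicity(n, k), boltzmann_entropy(n, k))
    print("most likely macrostate:", most_likely_macrostate(n))
```

Note that the count depends on what we decide a microstate is (here, a labeled arrangement of spins), which is where the "semi" in semi-objective comes in.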

J W Gibbs

The second of the approaches I will call the Gibbs approach. This approach is ensemble based. Instead of just considering the actual system, one considers every possible system, subject to certain constraints. Entropy is the spread of the ensembles. These constraints are often more about our measuring systems than the system itself, which leads to subjectivity. That entropy is subjective makes this sit well with Bayesian approaches. Bootstrapping and other resampling methods have their origin in this kind of thinking.
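One common way to put a number on the "spread" of an ensemble is the Gibbs entropy, minus the sum of p ln p over the ensemble's states (again in units of Boltzmann's constant). Below is a minimal sketch; the probability lists are made up for illustration and the function name is my own.

```python
import math


def gibbs_entropy(probs):
    """Gibbs entropy in units of k_B: -sum(p * ln p) over ensemble states."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # States with zero probability contribute nothing to the sum.
    return -sum(p * math.log(p) for p in probs if p > 0.0)


if __name__ == "__main__":
    uniform = [0.25, 0.25, 0.25, 0.25]  # maximally spread over 4 states
    peaked = [0.97, 0.01, 0.01, 0.01]   # nearly certain of one state
    print("uniform:", gibbs_entropy(uniform))
    print("peaked:", gibbs_entropy(peaked))
```

A more spread out ensemble has higher entropy. For an ensemble spread uniformly over W states the value is ln W, so at least in that special case it matches the microstate-counting entropy.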

Again, though dynamics can be important, they are secondary to information theoretic arguments. Arch-Bayesian Edwin Jaynes is famous for his dogmatic, chapter and verse defense of this approach. How does the use of ensembles sit with the supposedly Bayesian likelihood principle? Aren't we supposed to only consider the actual results? I don't know.

I don't know how the Gibbs approach relates to the Boltzmann approach, especially out of equilibrium. Sorry.

H Poincare

The third approach is the dynamical systems approach. This approach is very, very powerful and underlies the others. However, it basically never has answers. As an example, it was 103 years before even one dynamical system was directly found that obeys the above mentioned Maxwell distribution. At the time of Maxwell and Boltzmann this was the uncontroversial foundation of all things, but these days quantum mechanics and quantum field theory are known to be more basic. The first big result in this field is Liouville's Theorem. Other important results are proofs of chaotic behavior, ergodic theorems, etc. I've talked about my favorite device for learning this theory in this post. This is the best approach if you like your science extremely rigorous and moving at the speed of pitch.

Personal Note: had a conversation about application of Kelvin Inversion to economics and statistics (!!!) can't remember a single thing. Look for this later.
